In particle physics, scientists work with tiny, invisible particles, tracking their collisions and interactions with one another. We’re used to seeing these interactions depicted in graphs, in computer simulations and in abstract sets of data. But what if you could render them as music? What would these songs of the subatomic world sound like?

Adam Nadel and his exhibit in the Fermilab Art Gallery in 2019.

Adam Nadel, Fermilab’s 2018 artist-in-residence, set out to do just that. He worked with scientists on the MicroBooNE experiment at Fermilab, which uses a 170-ton detector to search for elusive particles called neutrinos. The detector is a liquid-argon time projection chamber: when a neutrino interacts with an argon nucleus inside it, the charged particles produced in the collision leave trails of ionization, and those signals are recorded by three planes of thousands of thin wires.

For this project, Nadel looked at the output of just one of those wires over about 750 microseconds (0.00075 seconds). In that time, the wire recorded the traces of several particles, including one that turned out to be exactly what MicroBooNE is looking for: a neutrino interaction. He then transcribed the data from that wire into a score for two pianos and cello. Nadel titled the piece “Run 3493, Event 41075,” after the numbers that identify the event in MicroBooNE’s records.
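The article doesn’t describe how Nadel turned wire readings into notes. As a purely hypothetical illustration of the general idea, the Python sketch below maps each sample of a made-up wire trace linearly onto a MIDI note number between 48 (C3) and 84 (C6). The function name, the pitch range and the toy data are all assumptions for illustration; this is not Nadel’s method and not real MicroBooNE data.

```python
# Hypothetical illustration only: not Nadel's actual mapping, not real detector data.
def samples_to_midi(samples, low_note=48, high_note=84):
    """Linearly map each sample value to a MIDI note in [low_note, high_note]."""
    lo, hi = min(samples), max(samples)
    span = (hi - lo) or 1  # a perfectly flat trace would otherwise divide by zero
    notes = []
    for s in samples:
        frac = (s - lo) / span  # 0.0 at the smallest sample, 1.0 at the largest
        notes.append(low_note + round(frac * (high_note - low_note)))
    return notes


# Made-up toy trace: a quiet baseline with one pulse in the middle.
trace = [0, 1, 0, 2, 1, 0, 40, 90, 35, 3, 0, 1]
print(samples_to_midi(trace))
# prints [48, 48, 48, 49, 48, 48, 64, 84, 62, 49, 48, 48]
```

In a mapping like this, a quiet baseline becomes a repeated low note and a pulse becomes a leap upward, so a cleaner trace naturally produces a steadier musical line.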

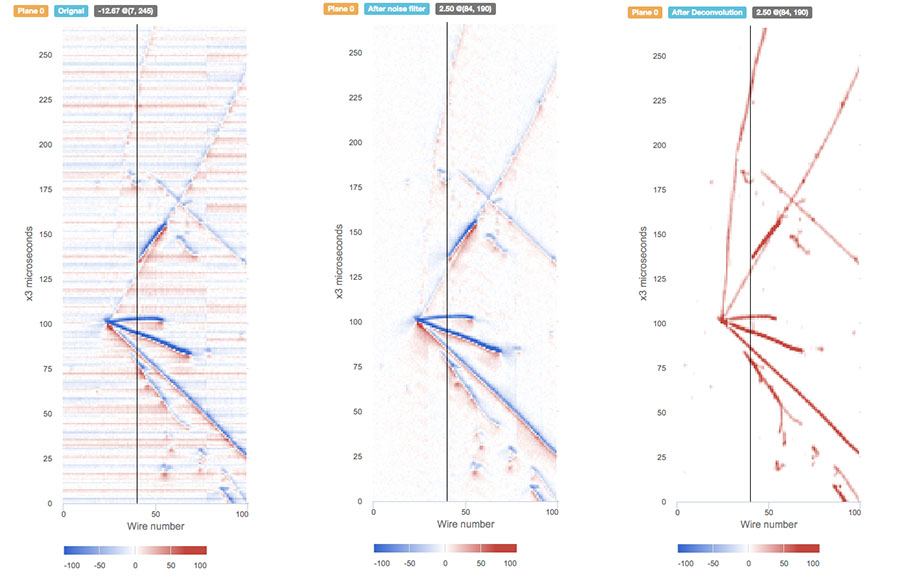

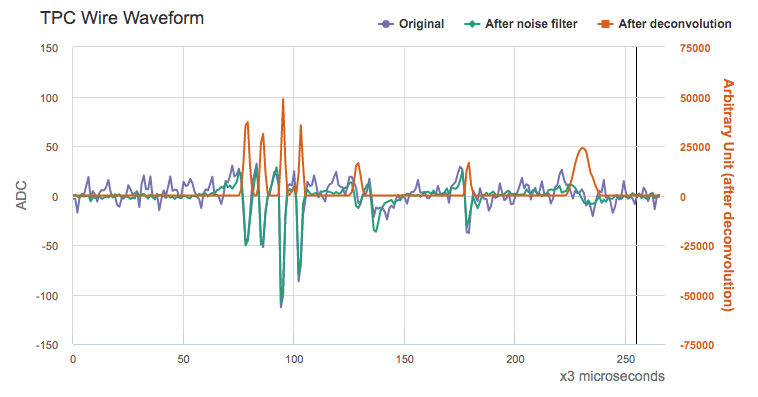

Each instrument plays the data as it appears at a different stage of the scientists’ analysis. The first piano (heard by itself in the first iteration of the piece) plays the raw data as it comes out of the detector. The second piano (which joins the first at 1:53) plays data that has undergone noise reduction, which removes cosmic rays and other signals that are less interesting to neutrino physicists. Because much of the stray data has been taken out, the second piano part changes somewhat less than the first.

The cello, which joins in at 3:39, plays the data after a step called deconvolution, which undoes the smearing introduced by the detector’s response so that real signals, such as the neutrino interaction you can hear at 4:07, stand out. The cello part is mostly steady, repeating one note, because the data is now clean except for the events scientists are most interested in. The fourth and final iteration, which begins at 5:28, finds the musicians improvising more freely, making the piece more musical.

Here is the final iteration on its own, in which the musicians bring out the piece’s musical character.

Recorded and supported by the Aaron Copland School of Music, Queens College, and performed by its students:

Mikiko Iwasaki – cello – deconvolution data

Laurence Cummings – piano – raw data (all piano solos)

Adam Ali – piano – noise reduction data

This is the output of one wire of the MicroBooNE detector over about 750 microseconds, during which the wire recorded the tracks of a particle interaction. The data is shown at each of its three processing stages: raw data, noise filtering and deconvolution. This is the data Adam Nadel transcribed into a musical score to create his piece.